OpenAI has developed a new series of AI models that spend more time thinking before responding. They can reason through complex tasks and solve harder problems than previous models in science, coding, and math.

OpenAI released the first model in this series in ChatGPT and the API. It is a preview, so expect regular updates and improvements. Alongside this release, OpenAI also published evaluations for the next update, which is currently in development.

What is OpenAI’s o1-preview?

OpenAI o1-preview, also known by the codename Strawberry, is the first in a series of AI reasoning models built to tackle hard problems in science, coding, mathematics, and related fields. Released on September 12, 2024, the model marks a substantial step forward in artificial intelligence capabilities, with a focus on improved reasoning and problem solving.

How It Works

The o1-preview model is trained to spend more time “thinking” before responding, using techniques such as chain-of-thought reasoning and self-reflection. This mirrors how people work through hard problems, allowing the model to refine its reasoning, try different strategies, and recognize its own mistakes.

By completing multi-step reasoning and self-reflection internally, o1-preview can arrive at more accurate and dependable solutions without external cues to “think step by step.”

In a qualifying exam for the International Mathematical Olympiad (IMO), GPT-4o correctly solved only 13% of the problems, whereas the reasoning model scored 83%. Its coding abilities were evaluated in contests, where it reached the 89th percentile in Codeforces competitions. More detail is available in OpenAI’s technical research post.

As an early model, it does not yet have many of the features that make ChatGPT useful, such as web browsing and file and image uploads. For many common use cases, GPT-4o will remain more capable in the near term.

However, for complex reasoning tasks, this is a significant advance and marks a new level of AI capability. For that reason, OpenAI reset the counter to 1 and named the series OpenAI o1.

Key Achievements:

OpenAI o1-preview has shown strong results on a range of hard tasks:

Mathematics

On the American Invitational Mathematics Examination (AIME), a qualifying exam for the International Mathematical Olympiad, the reasoning model solved 83% of the problems, far more than GPT-4o’s 13%.

Coding

The model reached the 89th percentile in Codeforces competitions, demonstrating strong coding ability.

Science

On difficult benchmark problems in physics, chemistry, and biology, the model performed comparably to PhD students, answering 75-80% of the questions correctly.

Comparisons to GPT-4o

The tables below highlight the performance improvements of o1-preview over GPT-4o:

Agent Evaluation and Safety

OpenAI introduced new evaluations to assess the model’s autonomy, persuasive capabilities, cybersecurity applications, and potential for catastrophic harm. o1-preview was rated low risk for cybersecurity and moderate risk for catastrophic scenarios, and its reasoning capabilities help it adhere more closely to safety and alignment guidelines.

o1-preview uses self-reflection to follow safety protocols, making it more resistant to jailbreaks and other manipulation attempts. Traditional exploits and jailbreaks are less effective because of the model’s built-in safety checks and adherence to alignment rules.

Impact on Prompt Engineering

The introduction of o1-preview represents a paradigm shift in the way users interact with large language models (LLMs).

Simplified Prompts

Users no longer have to create complex prompts or use prompt engineering approaches such as “think step by step.” The model automatically performs internal reasoning, which simplifies the user’s input needs.

Enhanced Understanding

The model can efficiently interpret concise, straightforward instructions, avoiding the need for detailed explanations or background.

Examples

Curious about how o1-preview approaches real-world coding and math problems? Explore these samples to see its reasoning abilities in action.

OpenAI o1-mini

OpenAI o1-mini is a faster, more cost-effective model in the o1 series, designed for coding tasks. It is 80% cheaper than o1-preview, making it suitable for applications that require reasoning but not broad world knowledge. While o1-mini does not match o1-preview on the hardest reasoning problems, it excels at accurately generating and debugging complex code.

This model is available to ChatGPT Plus members and has a higher weekly message limit than o1-preview. It also gives developers a cost-effective option, though API users are still billed for the tokens the model spends on its internal reasoning.

Pricing and Accessibility

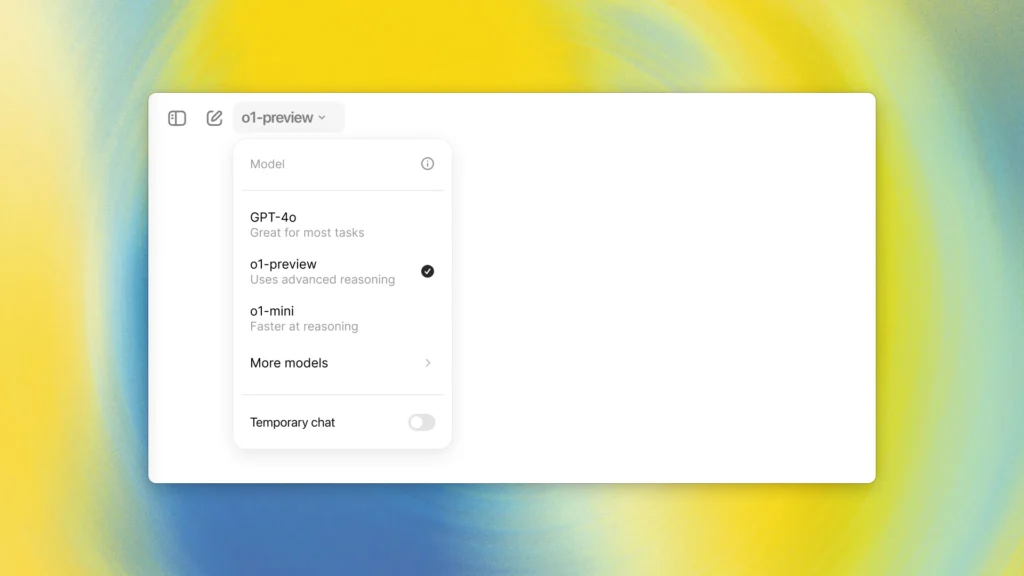

ChatGPT Plus Members

The model picker lets you manually select either o1-preview or o1-mini. At launch, the weekly rate limit is 30 messages for o1-preview and 50 for o1-mini. OpenAI intends to raise these limits and to let ChatGPT automatically select the best model for a given query.

| Model | Weekly Usage Limit |
| --- | --- |
| o1-mini | 50 messages |
| o1-preview | 30 messages |

Developers

Developers who qualify for API usage tier 5 can begin prototyping with both models, with a rate limit of 20 requests per minute. The API does not currently support function calling, streaming, or system messages. Developers can find more information about integration in the API documentation.
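The restrictions above shape what a request can look like. As a minimal sketch, the helper below builds the arguments for a single chat request under those launch-time limits. `build_o1_request` and its `instructions` parameter are hypothetical names introduced here for illustration, not part of any OpenAI library. Because system messages are not supported, the sketch assumes any instructions are simply prepended to the one user message.

```python
from typing import Optional

# Launch-time limit quoted above: 20 requests per minute for tier 5 developers.
REQUESTS_PER_MINUTE = 20
# Simple pacing that stays under the limit: one request every 3 seconds.
MIN_SECONDS_BETWEEN_REQUESTS = 60 / REQUESTS_PER_MINUTE


def build_o1_request(prompt: str,
                     instructions: Optional[str] = None,
                     model: str = "o1-preview") -> dict:
    """Build keyword arguments for one chat request (hypothetical helper).

    System messages are not supported at launch, so any instructions are
    folded into the single user message. No streaming or function calling
    parameters are included, since the API does not accept them yet.
    """
    content = prompt if not instructions else f"{instructions}\n\n{prompt}"
    return {
        "model": model,
        "messages": [{"role": "user", "content": content}],
    }


request = build_o1_request(
    "Write a function that checks whether a string is a palindrome.",
    instructions="Answer in Python.",
)
print(request["model"], request["messages"][0]["role"])
```

Assuming the official `openai` Python package and a configured API key, the resulting dictionary could be passed along as `client.chat.completions.create(**request)`. Note that the prompt stays short and direct, with no "think step by step" phrasing.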

| Model | Rate Limit (per 1 minute) | Input Tokens (per 1M) | Output Tokens (per 1M) |
| --- | --- | --- | --- |
| o1-preview | 20 messages | $15.00 | $60.00 |
| o1-mini | 20 messages | $3.00 | $12.00 |
| GPT-4o | 10,000 messages | $2.50 | $10.00 |
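To make the pricing concrete, here is a rough cost estimator built from the figures in the table above. It is a sketch under one stated assumption: the model's hidden reasoning tokens are billed at the output rate, in addition to the visible answer. The function names and the token counts in the example are illustrative only.

```python
# Prices in USD per 1M tokens, copied from the table above.
PRICES = {
    "o1-preview": {"input": 15.00, "output": 60.00},
    "o1-mini": {"input": 3.00, "output": 12.00},
    "gpt-4o": {"input": 2.50, "output": 10.00},
}


def estimate_cost(model, input_tokens, visible_output_tokens, reasoning_tokens=0):
    """Estimate the cost of one request in USD.

    Assumption: reasoning tokens are charged like output tokens.
    """
    price = PRICES[model]
    billed_output = visible_output_tokens + reasoning_tokens
    total = input_tokens * price["input"] + billed_output * price["output"]
    return total / 1_000_000


def relative_saving(cheaper, baseline, kind="input"):
    """Fraction saved per token by using `cheaper` instead of `baseline`."""
    return 1 - PRICES[cheaper][kind] / PRICES[baseline][kind]


# Illustrative request: 1,000 input tokens, 500 visible output tokens,
# and 2,000 reasoning tokens (made-up numbers).
print(f"o1-preview: ${estimate_cost('o1-preview', 1000, 500, 2000):.3f}")
print(f"o1-mini:    ${estimate_cost('o1-mini', 1000, 500, 2000):.3f}")
print(f"o1-mini saving on input price: {relative_saving('o1-mini', 'o1-preview'):.0%}")
```

The last line reproduces the "80% cheaper" figure for o1-mini: $3.00 is one fifth of $15.00, and the same ratio holds for output tokens ($12.00 versus $60.00).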

Future Developments

OpenAI intends to enhance o1-preview with more capabilities:

- Upcoming features include web browsing and file and image uploads.

- The model’s thinking time is expected to grow from minutes to hours or even days, allowing it to work on more complex problems.

- Future updates may extend the model’s context window beyond its current limit of 128k tokens.

How to Use OpenAI o1?

ChatGPT Plus and Team users can access the o1 models starting at launch. The model selector lets you manually choose o1-preview or o1-mini. At launch, the weekly rate limits are 30 messages for o1-preview and 50 for o1-mini.

OpenAI is working to raise those limits and to enable ChatGPT to automatically select the appropriate model for a given prompt.

Conclusion

OpenAI’s o1-preview represents a substantial advance in AI reasoning models, delivering stronger performance on demanding tasks that require deep reasoning and problem solving. The model streamlines user interaction by reducing the need for complex prompt engineering, and it strengthens safety measures to prevent misuse.

AI models such as o1-preview will take on increasingly complex tasks, potentially surpassing human specialists in certain disciplines. AI reasoning models are expected to be integrated into a variety of industries, increasing productivity and innovation. As AI capabilities advance, ethical use and safety will become increasingly important, demanding strong norms and oversight.

FAQs:

- How does o1-preview ensure safety and prevent misuse?

  The model uses a novel safety training approach that relies on its reasoning ability to follow safety rules and resist jailbreak attempts.

- Which developers have access to o1-preview via the API?

  Developers who qualify for API usage tier 5 have access to o1-preview, subject to rate limits. For additional information, see OpenAI’s API documentation.

- How does OpenAI o1-preview differ from previous models?

  o1-preview is designed to spend more time thinking before answering, relying on internal multi-step reasoning and self-reflection to solve complex problems more accurately.

- Is o1-preview a replacement for GPT-4o in all tasks?

  No. While o1-preview excels at reasoning-intensive tasks such as math and coding, GPT-4o may still perform better in areas that require broad world knowledge or varied language use.

- Do I need to use any specific instructions to get the best results from o1-preview?

  No, the model is designed to understand simple, straightforward instructions without prompt engineering techniques such as “think step by step.”